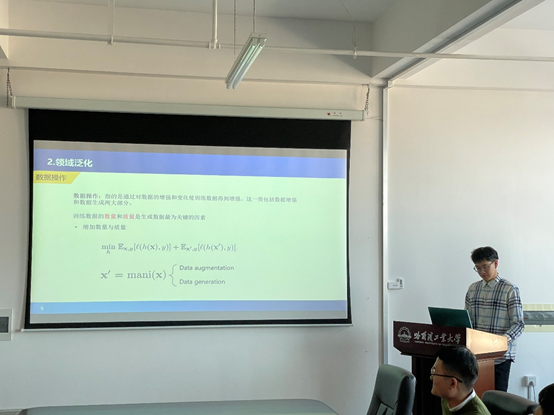

PhD Student Dai Wenxin (代文鑫) Presents the Academic Report "Research Status of Domain Generalization Techniques"

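I. About the Speaker:

Dai Wenxin (代文鑫) is a PhD student (2021 cohort) at the Institute of Electrical Apparatus and Electronic Reliability, School of Electrical Engineering, supervised by Professor Yang Chunling (杨春玲). Research focus: digital models for reliability prediction of board-level electronic systems.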

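II. Summary of the Report:

1. Attendees: Zheng Bokai (郑波恺), Hu Yifan (胡义凡), Sun Qisen (孙祺森), Zhao Zichuan (赵子川), Guo Zijian (郭子剑), Yang Yun (杨赟), Li Haoxiang (李浩翔), Wu Liqin (吴丽琴), Wang Haonan (王浩南), and others.

2. Main content: The talk introduced the background and taxonomy of domain generalization techniques, then explained, for each category, the principles of its methods and the scenarios in which they apply. It closed with a summary of and outlook for domain generalization.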

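III. Discussion:

1. Topic: The feasibility of applying domain generalization techniques to reliability prediction.

2. Views and comments from attendees: The technique offers strong model transfer capability, but it should be adapted to the actual requirements of the reliability prediction problems we face.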

IV. Areas for Improvement:

1. Application to cases with only a small number of data samples.

2. Prediction for batches of products.

V. Meeting Photos:

VI. Related State-of-the-Art Research at Home and Abroad:

References:

[1] X. Yue et al., “Domain randomization and pyramid consistency: Simulation-to-real generalization without accessing target domain data,” in ICCV, 2019, pp. 2100–2110.

[2] S. Shankar et al., “Generalizing across domains via cross-gradient training,” in ICLR, 2018.

[3] R. Volpi et al., “Generalizing to unseen domains via adversarial data augmentation,” in NeurIPS, 2018, pp. 5334–5344.

[4] D. P. Kingma and M. Welling, “Auto-encoding variational bayes,” arXiv preprint arXiv:1312.6114, 2013.

[5] I. J. Goodfellow et al., “Generative adversarial networks,” in NIPS, 2014.

[6] H. Zhang, M. Cisse, Y. N. Dauphin, and D. Lopez-Paz, “mixup: Beyond empirical risk minimization,” in ICLR, 2018.

[7] G. Blanchard, G. Lee, and C. Scott, “Generalizing from several related classification tasks to a new unlabeled sample,” in NeurIPS, 2011, pp. 2178–2186.

[8] K. Muandet, D. Balduzzi, and B. Schölkopf, “Domain generalization via invariant feature representation,” in ICML, 2013, pp. 10–18.

[9] M. Ghifary et al., “Scatter component analysis: A unified framework for domain adaptation and domain generalization,” IEEE TPAMI, vol. 39, no. 7, pp. 1414–1430, 2016.

[10] S. J. Pan, I. Tsang, J. T. Kwok, and Q. Yang, “Domain adaptation via transfer component analysis,” IEEE TNN, vol. 22, pp. 199–210, 2011.

[11] Y. Ganin and V. Lempitsky, “Unsupervised domain adaptation by backpropagation,” in ICML, 2015, pp. 1180–1189.

[12] X. Jin et al., “Style normalization and restitution for domain generalization and adaptation,” arXiv preprint arXiv:2101.00588, 2021.

[13] M. Arjovsky, L. Bottou, I. Gulrajani, and D. Lopez-Paz, “Invariant risk minimization,” arXiv preprint arXiv:1907.02893, 2019.

[14] M. Ilse, J. M. Tomczak, C. Louizos, and M. Welling, “Diva: Domain invariant variational autoencoders,” in Proceedings of the Third Conference on Medical Imaging with Deep Learning, 2020.

[15] D. Li, Y. Yang, Y.-Z. Song, and T. M. Hospedales, “Learning to generalize: Meta-learning for domain generalization,” in AAAI, 2018.

[16] Y. Li, Y. Yang, W. Zhou, and T. M. Hospedales, “Feature-critic networks for heterogeneous domain generalization,” in ICML, 2019.

[17] M. Mancini, S. R. Bulò, B. Caputo, and E. Ricci, “Best sources forward: domain generalization through source-specific nets,” in ICIP, 2018, pp. 1353–1357.

[18] M. Segù, A. Tonioni, and F. Tombari, “Batch normalization embeddings for deep domain generalization,” arXiv preprint arXiv:2011.12672, 2020.

[19] A. D’Innocente and B. Caputo, “Domain generalization with domain-specific aggregation modules,” in German Conference on Pattern Recognition. Springer, 2018, pp. 187–198.